Subsections of Getting Started

CRS Installation

This guide aims to get a CRS installation up and running. This guide assumes that a compatible ModSecurity engine is already present and working. If unsure then refer to the extended install page for full details.

Downloading the Rule Set

The first step is to download CRS. The CRS project strongly recommends using a supported version.

Official CRS releases can be found at the following URL: https://github.com/coreruleset/coreruleset/releases.

For production environments, it is recommended to use the latest release, which is v4.0.0. For testing the bleeding edge CRS version, nightly releases are also provided.

Verifying Releases

Note

Releases are signed using the CRS project’s GPG key (fingerprint: 3600 6F0E 0BA1 6783 2158 8211 38EE ACA1 AB8A 6E72). Releases can be verified using GPG/PGP compatible tooling.

To retrieve the CRS project’s public key from public key servers using gpg, execute: gpg --keyserver pgp.mit.edu --recv 0x38EEACA1AB8A6E72 (this ID should be equal to the last sixteen hex characters in the fingerprint).

It is also possible to use gpg --fetch-key https://coreruleset.org/security.asc to retrieve the key directly.
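The relationship between the fingerprint and the key ID described above can be checked mechanically. The following is a minimal sketch, assuming a POSIX shell; the helper name long_key_id is hypothetical and not part of gpg or CRS.

```shell
# Hypothetical helper: derive the long key ID (last 16 hex characters)
# from a GPG fingerprint written with or without spaces.
long_key_id() {
  # Remove spaces, then keep the final 16 characters.
  printf '%s' "$1" | tr -d ' ' | tail -c 16
}

fingerprint='3600 6F0E 0BA1 6783 2158 8211 38EE ACA1 AB8A 6E72'
echo "0x$(long_key_id "$fingerprint")"   # prints 0x38EEACA1AB8A6E72
```

If the printed value differs from the ID passed to gpg --recv, the key being requested is not the one the fingerprint describes.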

The following steps assume that a *nix operating system is being used. Installation is similar on Windows but likely involves using a zip file from the CRS releases page.

To download the release file and the corresponding signature:

wget https://github.com/coreruleset/coreruleset/archive/refs/tags/v4.0.0.tar.gz

wget https://github.com/coreruleset/coreruleset/releases/download/v4.0.0/coreruleset-4.0.0.tar.gz.asc

To verify the integrity of the release:

gpg --verify coreruleset-4.0.0.tar.gz.asc v4.0.0.tar.gz

gpg: Signature made Wed Jun 30 10:05:48 2021 -03

gpg: using RSA key 36006F0E0BA167832158821138EEACA1AB8A6E72

gpg: Good signature from "OWASP CRS <security@coreruleset.org>" [unknown]

gpg: WARNING: This key is not certified with a trusted signature!

gpg: There is no indication that the signature belongs to the owner.

Primary key fingerprint: 3600 6F0E 0BA1 6783 2158 8211 38EE ACA1 AB8A 6E72

If the signature was good then the verification succeeds. If a warning is displayed, like the above, it means the CRS project’s public key is known but is not trusted.

To trust the CRS project’s public key:

gpg --edit-key 36006F0E0BA167832158821138EEACA1AB8A6E72

gpg> trust

Your decision: 5 (ultimate trust)

Are you sure: Yes

gpg> quit

The result when verifying a release will then look like so:

gpg --verify coreruleset-4.0.0.tar.gz.asc v4.0.0.tar.gz

gpg: Signature made Wed Jun 30 15:05:48 2021 CEST

gpg: using RSA key 36006F0E0BA167832158821138EEACA1AB8A6E72

gpg: Good signature from "OWASP CRS <security@coreruleset.org>" [ultimate]
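When the verification is scripted rather than read by eye, the exit status of gpg --verify can be used instead of the printed text. The sketch below assumes a POSIX shell and that gpg is on the PATH; the function name verify_release is hypothetical.

```shell
# Hypothetical wrapper: succeed only if gpg accepts the detached signature.
# Usage: verify_release SIGNATURE_FILE ARCHIVE_FILE
verify_release() {
  sig=$1
  archive=$2
  if gpg --verify "$sig" "$archive"; then
    echo "signature OK: $archive"
  else
    echo "signature check FAILED: $archive" >&2
    return 1
  fi
}

# Example (not executed here):
#   verify_release coreruleset-4.0.0.tar.gz.asc v4.0.0.tar.gz && echo "safe to extract"
```

A good signature from a key that has not been marked as trusted still produces the warning shown earlier, so a script that needs to distinguish the two cases has to inspect the trust level separately.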

Installing the Rule Set

Once the rule set has been downloaded and verified, extract the rule set files to a well-known location on the server. This will typically be somewhere in the web server directory.

The examples presented below demonstrate using Apache. For information on configuring Nginx or IIS see the extended install page.

Note that while it’s common practice to make a new modsecurity.d folder, as outlined below, this isn’t strictly necessary. The path scheme outlined is common on RHEL-based operating systems; the Apache path used may need to be adjusted to match the server’s installation.

mkdir /etc/crs4

tar -xzvf v4.0.0.tar.gz --strip-components 1 -C /etc/crs4

Now all the CRS files will be located below the /etc/crs4 directory.
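The files land directly below /etc/crs4 because --strip-components 1 drops the archive's top-level folder during extraction. The following sketch illustrates the effect on a throwaway archive; the folder name coreruleset-demo and all paths are made up for the demonstration and live in a temporary directory.

```shell
# Build a small archive with a single top-level folder, then extract it
# with --strip-components 1 and list what ends up in the destination.
demo_strip_components() {
  work=$(mktemp -d) || return 1
  mkdir -p "$work/src/coreruleset-demo/rules" "$work/dest"
  echo '# placeholder' > "$work/src/coreruleset-demo/crs-setup.conf.example"
  tar -czf "$work/demo.tar.gz" -C "$work/src" coreruleset-demo
  tar -xzf "$work/demo.tar.gz" --strip-components 1 -C "$work/dest"
  ls "$work/dest"      # the top-level folder itself is gone
  rm -rf "$work"
}

demo_strip_components
```

The listing shows crs-setup.conf.example and rules directly in the destination, without the enclosing coreruleset-demo folder.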

Setting Up the Main Configuration File

After extracting the rule set files, the next step is to set up the main OWASP CRS configuration file. An example configuration file is provided as part of the release package, located in the main directory: crs-setup.conf.example.

Note

Other aspects of ModSecurity, particularly engine-specific parameters, are controlled by the ModSecurity “recommended” configuration rules, modsecurity.conf-recommended. This file comes packaged with ModSecurity itself.

In many scenarios, the default example CRS configuration will be a good enough starting point. It is, however, a good idea to take the time to look through the example configuration file before deploying it to make sure it’s right for a given environment.

Warning

In particular, response rules are enabled by default. Be aware that you may be vulnerable to RFDoS attacks, depending on the responses your application sends back to the client. You could be vulnerable if responses from your application can contain user input. If an attacker can submit user input that is returned as part of a response, the attacker can craft the input in such a way that the response rules of the WAF will block responses containing that input for all clients. For example, a blog post might no longer be accessible because of the contents of a comment on the post. See this blog post about the problems you could face.

There is an experimental scanner that uses nuclei to find out if you are affected. So if you are unsure, first test your application before enabling the response rules, or risk accidentally blocking some valid responses.

Since CRS version 4.10.0, response rules can easily be disabled by uncommenting the rule with ID 900500 in the crs-setup.conf file.

The CRS team believes that, in general, the damage that can be caused by webshells and information leakage outweighs the damage of RFDoS attacks. Thus, the response rules remain active in the default configuration for now.

Once any settings have been changed within the example configuration file, as needed, it should be renamed to remove the .example portion, like so:

cd /etc/crs4

mv crs-setup.conf.example crs-setup.conf

Include-ing the Rule Files

The last step is to tell the web server where the rules are. This is achieved by include-ing the rule configuration files in the httpd.conf file. Again, this example demonstrates using Apache, but the process is similar on other systems (see the extended install page for details).

echo 'IncludeOptional /etc/crs4/crs-setup.conf' >> /etc/httpd/conf/httpd.conf

echo 'IncludeOptional /etc/crs4/plugins/*-config.conf' >> /etc/httpd/conf/httpd.conf

echo 'IncludeOptional /etc/crs4/plugins/*-before.conf' >> /etc/httpd/conf/httpd.conf

echo 'IncludeOptional /etc/crs4/rules/*.conf' >> /etc/httpd/conf/httpd.conf

echo 'IncludeOptional /etc/crs4/plugins/*-after.conf' >> /etc/httpd/conf/httpd.conf
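Running the echo commands above a second time would append the same directives again. One way to avoid duplicates is sketched below, assuming a POSIX shell; the helper name append_once is hypothetical, and a temporary file stands in for /etc/httpd/conf/httpd.conf so the sketch can be tried safely.

```shell
# Hypothetical helper: append a line to a file only if that exact line
# is not already present (fixed-string, whole-line match).
append_once() {
  file=$1
  line=$2
  touch "$file"
  grep -qxF -e "$line" "$file" || printf '%s\n' "$line" >> "$file"
}

# Stand-in for the real Apache configuration file.
conf=$(mktemp)

# Two passes over the same list: the second pass adds nothing.
for pass in 1 2; do
  for path in \
    '/etc/crs4/crs-setup.conf' \
    '/etc/crs4/plugins/*-config.conf' \
    '/etc/crs4/plugins/*-before.conf' \
    '/etc/crs4/rules/*.conf' \
    '/etc/crs4/plugins/*-after.conf'
  do
    append_once "$conf" "IncludeOptional $path"
  done
done

cat "$conf"     # five directives, in the same order as above
rm -f "$conf"
```

The order of the directives is preserved, which matters because the plugin configuration and "before" files are meant to load ahead of the rules and the "after" files behind them.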

Now that everything has been configured, it should be possible to restart and begin using the OWASP CRS. The CRS rules typically require a bit of tuning with rule exclusions, depending on the site and web applications in question. For more information on tuning, see false positives and tuning.

systemctl restart httpd.service

Alternative: Using Containers

Another quick option is to use the official CRS pre-packaged containers. Docker, Podman, or any compatible container engine can be used. The official CRS images are published on Docker Hub and GitHub Container Registry. The image most often deployed is modsecurity-crs (owasp/modsecurity-crs from Docker Hub or ghcr.io/coreruleset/modsecurity-crs from GHCR): it already has everything needed to get up and running quickly.

The CRS project pre-packages both Apache and Nginx web servers along with the appropriate corresponding ModSecurity engine. More engines, like Coraza, will be added at a later date.

To protect a running web server, all that’s required is to get the appropriate image and set its configuration variables so that the WAF receives requests and proxies them to your backend server.

Below is an example docker compose file that can be used to pull the container images. If you don’t have compose installed, please read the installation instructions. All that needs to be changed is the BACKEND variable so that the WAF points to the backend server in question:

services:
  modsec2-apache:
    container_name: modsec2-apache
    image: owasp/modsecurity-crs:apache
    # if you are using Linux, you will need to uncomment the below line
    # user: root
    environment:
      SERVERNAME: modsec2-apache
      BACKEND: http://<backend server>
      PORT: "80"
      MODSEC_RULE_ENGINE: DetectionOnly
      BLOCKING_PARANOIA: 2
      TZ: "${TZ}"
      ERRORLOG: "/var/log/error.log"
      ACCESSLOG: "/var/log/access.log"
      MODSEC_AUDIT_LOG_FORMAT: Native
      MODSEC_AUDIT_LOG_TYPE: Serial
      MODSEC_AUDIT_LOG: "/var/log/modsec_audit.log"
      MODSEC_TMP_DIR: "/tmp"
      MODSEC_RESP_BODY_ACCESS: "On"
      MODSEC_RESP_BODY_MIMETYPE: "text/plain text/html text/xml application/json"
      COMBINED_FILE_SIZES: "65535"
    volumes:
    ports:
      - "80:80"

That’s all that needs to be done. Simply starting the container described above will instantly provide the protection of the latest stable CRS release in front of a given backend server or service. There are lots of additional variables that can be used to configure the container image and its behavior, so be sure to read the full documentation.

Verifying that the CRS is active

Always verify that CRS is installed correctly by sending a ‘malicious’ request to your site or application, for instance:

curl 'https://www.example.com/?foo=/etc/passwd&bar=/bin/sh'

Depending on your configured thresholds, this should be detected as a malicious request. If you use blocking mode, you should receive an Error 403. The request should also be logged to the audit log, which is usually in /var/log/modsec_audit.log.
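The check described above can be scripted by asking curl for the status code only. This is a minimal sketch, assuming a POSIX shell and curl on the PATH; the function names probe_status and interpret_status are hypothetical.

```shell
# Send a request and print only the HTTP status code.
# curl reports 000 when no HTTP response was received at all.
probe_status() {
  curl -s -o /dev/null -w '%{http_code}' "$1"
}

# Map a status code to a short verdict.
interpret_status() {
  case "$1" in
    403) echo "blocked" ;;
    000) echo "no response" ;;
    *)   echo "not blocked" ;;
  esac
}

# Example (not executed here):
#   interpret_status "$(probe_status 'https://www.example.com/?foo=/etc/passwd&bar=/bin/sh')"
```

A "not blocked" verdict does not necessarily mean CRS is inactive: when the engine runs in detection-only mode, the request passes through and the match only shows up in the audit log.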

Upgrading

Upgrading from CRS 3.x to CRS 4

The most impactful change is the removal of application exclusion packages in favor of a plugin system. If you had activated the exclusion packages in CRS 3, you should download the plugins for them and place them in the plugins subdirectory. We maintain the list of plugins in our Plugin Registry. You can find detailed information on working with plugins in our plugins documentation.

In terms of changes to the detection rules, the number of changes is smaller than in the CRS 2 to CRS 3 changeover. Most rules have only evolved slightly, so it is recommended that you keep any existing custom exclusions that you have made under CRS 3.

We recommend starting over by copying our crs-setup.conf.example to crs-setup.conf with a copy of your old file at hand, and redoing the customizations that you had under CRS 3.

Please note that we added a large number of new detections, and any new detection brings a certain risk of false alarms. Therefore, we recommend testing first before going live.

Upgrading from CRS 2.x to CRS 3

In general, you can update by unzipping our new release over your older one, and updating the crs-setup.conf file with any new settings. However, CRS 3.0 is a major rewrite, incompatible with CRS 2.x. Key setup variables have changed their names, and new features have been introduced. Your former modsecurity_crs_10_setup.conf file is thus no longer usable. We recommend that you start from scratch with a fresh crs-setup.conf file.

Most rule IDs have been changed to reorganize them into logical sections. This means that if you have written custom configuration with exclusion rules (e.g. SecRuleRemoveById, SecRuleRemoveTargetById, ctl:ruleRemoveById or ctl:ruleRemoveTargetById) you must renumber the rule numbers in that configuration. You can do this using the supplied utility util/id_renumbering/update.py or find the changes in util/id_renumbering/IdNumbering.csv.

However, a key feature of the CRS 3 is the reduction of false positives in the default installation, and many of your old exclusion rules may no longer be necessary. Therefore, it is a good option to start fresh without your old exclusion rules.

If you are experienced in writing exclusion rules for CRS 2.x, it may be worthwhile to try running CRS 3 in Paranoia Level 2 (PL2). This is a stricter mode, which blocks additional attack patterns but brings a higher number of false positives; in many situations the false positives will be comparable with CRS 2.x. This paranoia level will, however, bring you a higher protection level than CRS 2.x or a CRS 3 default install, so it can be worth the investment.

Extended Install

All the information needed to properly install CRS is presented on this page. The installation concepts are expanded upon and presented in more detail than the quick start guide.

To contact the CRS project with questions or problems, reach out via the project’s Google group or Slack channel (for Slack channel access, use this link to get an invite).

Prerequisites

Installing the CRS isn’t very difficult but does have one major requirement: a compatible engine. The reference engine used throughout this page is ModSecurity.

Note

In order to successfully run CRS 3.x using ModSecurity it is recommended to use the latest version available. For Nginx use the 3.x branch of ModSecurity, and for Apache use the latest 2.x branch.

Installing a Compatible WAF Engine

Two different methods to get an engine up and running are presented here:

- using the chosen engine as provided and packaged by the OS distribution

- compiling the chosen engine from source

A ModSecurity installation is presented in the examples below, however the install documentation for the Coraza engine can be found here.

Option 1: Installing Pre-Packaged ModSecurity

ModSecurity is frequently pre-packaged and is available from several major Linux distributions.

- Debian: Friends of the CRS project DigitalWave package and, most importantly, keep ModSecurity updated for Debian and derivatives.

- Fedora: Execute dnf install mod_security for Apache + ModSecurity v2.

- RHEL compatible: Install EPEL and then execute yum install mod_security.

For Windows, get the latest MSI package from https://github.com/owasp-modsecurity/ModSecurity/releases.

Warning

Distributions might not update their ModSecurity releases frequently.

As a result, it is quite likely that a distribution’s version of ModSecurity may be missing important features or may even contain security vulnerabilities. Additionally, depending on the package and package manager used, the ModSecurity configuration will be laid out slightly differently.

As the different engines and distributions have different layouts for their configuration, to simplify the documentation presented here the prefix <web server config>/ will be used from this point on.

Examples of <web server config>/ include:

- /etc/apache2 in Debian and derivatives

- /etc/httpd in RHEL and derivatives

- /usr/local/apache2 if Apache was compiled from source using the default prefix

- C:\Program Files\ModSecurity IIS\ (or Program Files(x86), depending on configuration) on Windows

- /etc/nginx

Option 2: Compiling ModSecurity From Source

Compiling ModSecurity is easy, but slightly outside the scope of this document. For information on how to compile ModSecurity, refer to:

Unsupported Configurations

Note that the following configurations are not supported. They do not work as expected. The CRS project recommendation is to avoid these setups:

- Nginx with ModSecurity v2

- Apache with ModSecurity v3

Testing the Compiled Module

Once ModSecurity has been compiled, there is a simple test to see if the installation is working as expected. After compiling from source, use the appropriate directive to load the newly compiled module into the web server. For example:

- Apache:

LoadModule security2_module modules/mod_security2.so

- Nginx:

load_module modules/ngx_http_modsecurity_module.so;

Now restart the web server. ModSecurity should output that it’s being used.

Nginx should show something like:

2022/04/21 23:45:52 [notice] 1#1: ModSecurity-nginx v1.0.2 (rules loaded inline/local/remote: 0/6/0)

Apache should show something like:

[Thu Apr 21 23:55:35.142945 2022] [:notice] [pid 2528:tid 140410548673600] ModSecurity for Apache/2.9.3 (http://www.modsecurity.org/) configured.

[Thu Apr 21 23:55:35.142980 2022] [:notice] [pid 2528:tid 140410548673600] ModSecurity: APR compiled version="1.6.5"; loaded version="1.6.5"

[Thu Apr 21 23:55:35.142985 2022] [:notice] [pid 2528:tid 140410548673600] ModSecurity: PCRE compiled version="8.39 "; loaded version="8.39 2016-06-14"

[Thu Apr 21 23:55:35.142988 2022] [:notice] [pid 2528:tid 140410548673600] ModSecurity: LUA compiled version="Lua 5.1"

[Thu Apr 21 23:55:35.142991 2022] [:notice] [pid 2528:tid 140410548673600] ModSecurity: YAJL compiled version="2.1.0"

[Thu Apr 21 23:55:35.142994 2022] [:notice] [pid 2528:tid 140410548673600] ModSecurity: LIBXML compiled version="2.9.4"

[Thu Apr 21 23:55:35.142997 2022] [:notice] [pid 2528:tid 140410548673600] ModSecurity: Status engine is currently disabled, enable it by set SecStatusEngine to On.

[Thu Apr 21 23:55:35.187082 2022] [mpm_event:notice] [pid 2530:tid 140410548673600] AH00489: Apache/2.4.41 (Ubuntu) configured -- resuming normal operations

[Thu Apr 21 23:55:35.187125 2022] [core:notice] [pid 2530:tid 140410548673600] AH00094: Command line: '/usr/sbin/apache2'

Microsoft IIS with ModSecurity 2.x

The initial configuration file is modsecurity_iis.conf. This file will be parsed by ModSecurity for both ModSecurity directives and 'Include' directives.

Additionally, in the Event Viewer, under Windows Logs\Application, it should be possible to see a new log entry showing ModSecurity being successfully loaded.

At this stage, the ModSecurity on IIS setup is working and new directives can be placed in the configuration file as needed.

Downloading OWASP CRS

With a compatible WAF engine installed and working, the next step is typically to download and install the OWASP CRS. The CRS project strongly recommends using a supported version.

Official CRS releases can be found at the following URL: https://github.com/coreruleset/coreruleset/releases.

For production environments, it is recommended to use the latest release, which is v4.0.0. For testing the bleeding edge CRS version, nightly releases are also provided.

Verifying Releases

Note

Releases are signed using the CRS project’s GPG key (fingerprint: 3600 6F0E 0BA1 6783 2158 8211 38EE ACA1 AB8A 6E72). Releases can be verified using GPG/PGP compatible tooling.

To retrieve the CRS project’s public key from public key servers using gpg, execute: gpg --keyserver pgp.mit.edu --recv 0x38EEACA1AB8A6E72 (this ID should be equal to the last sixteen hex characters in the fingerprint).

It is also possible to use gpg --fetch-key https://coreruleset.org/security.asc to retrieve the key directly.

The following steps assume that a *nix operating system is being used. Installation is similar on Windows but likely involves using a zip file from the CRS releases page.

To download the release file and the corresponding signature:

wget https://github.com/coreruleset/coreruleset/archive/refs/tags/v4.0.0.tar.gz

wget https://github.com/coreruleset/coreruleset/releases/download/v4.0.0/coreruleset-4.0.0.tar.gz.asc

To verify the integrity of the release:

gpg --verify coreruleset-4.0.0.tar.gz.asc v4.0.0.tar.gz

gpg: Signature made Wed Jun 30 10:05:48 2021 -03

gpg: using RSA key 36006F0E0BA167832158821138EEACA1AB8A6E72

gpg: Good signature from "OWASP CRS <security@coreruleset.org>" [unknown]

gpg: WARNING: This key is not certified with a trusted signature!

gpg: There is no indication that the signature belongs to the owner.

Primary key fingerprint: 3600 6F0E 0BA1 6783 2158 8211 38EE ACA1 AB8A 6E72

If the signature was good then the verification succeeds. If a warning is displayed, like the above, it means the CRS project’s public key is known but is not trusted.

To trust the CRS project’s public key:

gpg --edit-key 36006F0E0BA167832158821138EEACA1AB8A6E72

gpg> trust

Your decision: 5 (ultimate trust)

Are you sure: Yes

gpg> quit

The result when verifying a release will then look like so:

gpg --verify coreruleset-4.0.0.tar.gz.asc v4.0.0.tar.gz

gpg: Signature made Wed Jun 30 15:05:48 2021 CEST

gpg: using RSA key 36006F0E0BA167832158821138EEACA1AB8A6E72

gpg: Good signature from "OWASP CRS <security@coreruleset.org>" [ultimate]

With the CRS release downloaded and verified, the rest of the set up can continue.

Setting Up OWASP CRS

OWASP CRS contains a setup file that should be reviewed prior to completing set up. The setup file is the only configuration file within the root ‘coreruleset-4.0.0’ folder and is named crs-setup.conf.example. Examining this configuration file and reading what the different options are is highly recommended.

At a minimum, keep in mind the following:

- CRS does not configure features such as the rule engine, audit engine, logging, etc. This task is part of the initial engine setup and is not a job for the rule set. For ModSecurity, if not already done, see the recommended configuration.

- Decide what ModSecurity should do when it detects malicious activity, e.g., drop the packet, return a 403 Forbidden status code, issue a redirect to a custom page, etc.

- Make sure to configure the anomaly scoring thresholds. For more information see Anomaly.

- By default, the CRS rules will consider many issues with different databases and languages. If running in a specific environment, e.g., without any SQL database services present, it is probably a good idea to limit this behavior for performance reasons.

- Make sure to add any HTTP methods, static resources, content types, or file extensions that are needed, beyond the default ones listed.

Once reviewed and configured, the CRS configuration file should be renamed by removing the .example suffix, so that the file name ends in .conf:

mv crs-setup.conf.example crs-setup.conf

In addition to crs-setup.conf.example, there are two other “.example” files within the CRS repository. These are:

- rules/REQUEST-900-EXCLUSION-RULES-BEFORE-CRS.conf.example

- rules/RESPONSE-999-EXCLUSION-RULES-AFTER-CRS.conf.example

These files are designed to provide the rule maintainer with the ability to modify rules (see false positives and tuning) without breaking forward compatibility with rule set updates. These two files should be renamed by removing the .example suffix. This will mean that installing updates will not overwrite custom rule exclusions. To rename the files in Linux, use a command similar to the following:

mv rules/REQUEST-900-EXCLUSION-RULES-BEFORE-CRS.conf.example rules/REQUEST-900-EXCLUSION-RULES-BEFORE-CRS.conf

mv rules/RESPONSE-999-EXCLUSION-RULES-AFTER-CRS.conf.example rules/RESPONSE-999-EXCLUSION-RULES-AFTER-CRS.conf
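The same renaming can be done in a loop that refuses to overwrite an existing file, which helps when the step is repeated after an update. This is a sketch under the assumption of a POSIX shell; the function name activate_examples is hypothetical.

```shell
# Hypothetical helper: for every *.example file in a directory, drop the
# suffix unless a file with the target name already exists.
activate_examples() {
  dir=$1
  for f in "$dir"/*.example; do
    [ -e "$f" ] || continue        # no matches: the pattern stays literal
    target=${f%.example}           # strip the trailing ".example"
    if [ -e "$target" ]; then
      echo "skip: $target already exists" >&2
    else
      mv "$f" "$target"
    fi
  done
}

# Example (not executed here), run from the CRS directory:
#   activate_examples rules
```

Skipping existing targets keeps previously customized exclusion files untouched, in line with the goal of not losing custom rule exclusions during updates.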

Proceeding with the Installation

The engine should support the Include directive out of the box. This directive tells the engine to parse additional files for directives. The question is where to put the CRS rules folder in order for it to be included.

Looking at the CRS files, there are quite a few “.conf” files. While the names attempt to do a good job at describing what each file does, additional information is available in the rules section.

Includes for Apache

It is recommended to create a folder specifically to contain the CRS rules. In the example presented here, a folder named modsecurity.d has been created and placed within the root <web server config>/ directory. When using Apache, wildcard notation can be used to vastly simplify the Include rules. Simply copying the cloned directory into the modsecurity.d folder and specifying the appropriate Include directives will install OWASP CRS. In the example below, the modsecurity.conf file has also been included, which includes recommended configurations for ModSecurity.

<IfModule security2_module>

Include modsecurity.d/modsecurity.conf

Include /etc/crs4/crs-setup.conf

Include /etc/crs4/plugins/*-config.conf

Include /etc/crs4/plugins/*-before.conf

Include /etc/crs4/rules/*.conf

Include /etc/crs4/plugins/*-after.conf

</IfModule>

Includes for Nginx

Nginx will include files from the Nginx configuration directory (/etc/nginx or /usr/local/nginx/conf/, depending on the environment). Because only one ModSecurityConfig directive can be specified within nginx.conf, it is recommended to name that file modsec_includes.conf and include additional files from there. In the example below, the cloned coreruleset folder was copied into the Nginx configuration directory. From there, the appropriate include directives are specified which will include OWASP CRS when the server is restarted. In the example below, the modsecurity.conf file has also been included, which includes recommended configurations for ModSecurity.

Include modsecurity.d/modsecurity.conf

Include /etc/crs4/crs-setup.conf

Include /etc/crs4/plugins/*-config.conf

Include /etc/crs4/plugins/*-before.conf

Include /etc/crs4/rules/*.conf

Include /etc/crs4/plugins/*-after.conf

Note

You will also need to include the plugins you want along with your CRS installation.

Using Containers

The CRS project maintains a set of ‘CRS with ModSecurity’ Docker images.

A full operational guide on how to use and deploy these images will be written in the future. In the meantime, refer to the GitHub README page for more information on how to use these official container images.

ModSecurity CRS Docker Image

https://github.com/coreruleset/modsecurity-crs-docker

A Docker image supporting the latest stable CRS release on:

- the latest stable ModSecurity v2 on Apache

- the latest stable ModSecurity v3 on Nginx

Engine and Integration Options

CRS runs on WAF engines that are compatible with a subset of ModSecurity’s SecLang configuration language. There are several options outside of ModSecurity itself, namely cloud offerings and content delivery network (CDN) services. There is also an open-source alternative to ModSecurity in the form of the new Coraza WAF engine.

Compatible Free and Open-Source WAF Engines

ModSecurity v2

ModSecurity v2, originally a security module for the Apache web server, is the reference implementation for CRS.

ModSecurity 2.9.x passes 100% of the CRS unit tests on the Apache platform.

When running ModSecurity, this is the option that is practically guaranteed to work with most documentation and know-how all around.

ModSecurity is released under the Apache License 2.0, and the project now lives under the OWASP Foundation umbrella.

There is a ModSecurity v2 / Apache Docker container which is maintained by the CRS project.

ModSecurity v3

ModSecurity v3, also known as libModSecurity, is a re-implementation of ModSecurity v2 with an architecture that is less dependent on the web server. The connection between the standalone ModSecurity and the web server is made using a lean connector module.

As of spring 2021, only the Nginx connector module is really usable in production.

ModSecurity v3 fails 2-4% of the CRS unit tests due to bugs and implementation gaps. Nginx + ModSecurity v3 also suffers from performance problems when compared to the Apache + ModSecurity v2 platform. This may be surprising for people familiar with the high performance of Nginx.

ModSecurity v3 is used in production together with Nginx, but the CRS project recommends using the ModSecurity v2 release line with Apache.

ModSecurity is released under the Apache License 2.0. It was primarily developed by Spiderlabs, an entity within the company Trustwave. In summer 2021, Trustwave announced their plans to end development of ModSecurity in 2024. On Jan 25th 2024, the project was transferred to the OWASP Foundation.

There is a ModSecurity v3 / Nginx Docker container which is maintained by the CRS project.

Coraza

OWASP Coraza WAF is meant to provide an open-source alternative to the two ModSecurity release lines.

Coraza passes 100% of the CRS v4 test suite and is thus fully compatible with CRS.

Coraza has been developed in Go and currently runs on the Caddy and Traefik platforms. Additional ports are being developed and the developers also seek to bring Coraza to Nginx and, eventually, Apache. In parallel to this expansion, Coraza will be developed further with its own feature set.

To learn more about CRS and Coraza, read this CRS blog post which introduces Coraza.

Commercial WAF Appliances

Dozens of commercial WAFs, both virtual and hardware-based, offer CRS as part of their service. Many of them use ModSecurity underneath, or some alternative implementation (although this is rare for WAF appliances). Most of these commercial WAFs either don’t offer the full feature set of CRS or they don’t make it easily accessible. With some of these companies, there is often also a lack of CRS experience and knowledge.

The CRS project recommends evaluating these commercial appliance-based offerings in a holistic way before buying a license.

In light of the many, many appliance offerings on the market and the CRS project’s relatively limited exposure, only a few offerings are listed here.

HAProxy Technologies

HAProxy Technologies embeds ModSecurity v3 in three of its products via the Libmodsecurity module. ModSecurity is included with: HAProxy Enterprise, HAProxy ALOHA, and HAProxy Enterprise Kubernetes Ingress Controller.

To learn more, visit the HAProxy WAF solution page on haproxy.com.

There is also a Coraza SPOA solution that works with HAProxy.

Kemp/Progressive LoadMaster

The Kemp LoadMaster is a popular load balancer that integrates ModSecurity v2 and CRS in a typical way. It goes further than the competition with the support of most CRS features.

To learn more, read this blog post about CRS on LoadMaster.

Kemp/Progressive is a sponsor of CRS.

Loadbalancer.org

The load balancer appliance from Loadbalancer.org features WAF functionality based on Apache + ModSecurity v2 + CRS, sandwiched by HAProxy for load balancing. It’s available as a hardware, virtual, and cloud appliance.

To learn more, read this blog post about CRS at Loadbalancer.org.

VMware® Avi Load Balancer

VMware® Avi Load Balancer is a modern virtual load balancer and proxy with strong WAF capabilities.

To learn more, read the VMware Avi Load Balancer WAF documentation.

Existing CRS Integrations: Cloud and CDN Offerings

Most big cloud providers and CDNs provide a CRS offering. While originally these were mostly based on ModSecurity, over time they have all moved to alternative (usually proprietary) implementations of ModSecurity’s SecLang configuration language, or they transpose the CRS rules written in SecLang into their own domain specific language (DSL).

The CRS project has some insight into some of these platforms and is in touch with most of these providers. The exact specifics are not really known, but what is known is that almost all of these integrators compromised and provide only a subset of CRS rules and a subset of features, in the interests of ease of integration and operation.

Info

The CRS Status page project will be testing cloud and CDN offerings. As part of this effort, the CRS project will be documenting the results and even publishing code on how to quickly get started using CRS in CDN/cloud providers. This status page project is in development as of spring 2022.

A selection of these platforms is listed below, along with links to get more info.

AWS WAF

Note

AWS provides a rule set called the “Core rule set (CRS) managed rule group” which “…provides protection against… commonly occurring vulnerabilities described in OWASP publications such as OWASP Top 10.”

The CRS project does not believe that the AWS WAF “core rule set” is based on or related to OWASP CRS.

Cloudflare WAF

Cloudflare WAF supports CRS as one of its WAF rule sets. Documentation on how to use it can be found in Cloudflare’s documentation.

Fastly

Fastly has offered CRS as part of their Fastly WAF for several years, but they have started to migrate their existing customers to the recently acquired Signal Sciences WAF. Interestingly, Fastly is transposing CRS rules into their own Varnish-based WAF engine. Unfortunately, documentation on their legacy WAF offering has been removed.

Google Cloud Armor

Google integrates CRS into its Cloud Armor WAF offering. Google runs the CRS rules on their own WAF engine. As of fall 2022, Google offers version 3.3.2 of CRS.

To learn more about CRS on Google’s Cloud Armor, read this document from Google.

Google Cloud Armor is a sponsor of CRS.

Microsoft Azure WAF

Azure Application Gateways can be configured to use the WAFv2 and managed rules with different versions of CRS. Azure provides the 3.2, 3.1, 3.0, and 2.2.9 CRS versions. We recommend using version 3.2 (see our security policy for details on supported CRS versions).

Oracle WAF

The Oracle WAF is a cloud-based offering that includes CRS. To learn more, read Oracle’s WAF documentation.

Alternative Use Cases

Outside of the narrower implementation of a WAF, CRS can also be found in different security-related setups.

Subsections of How CRS Works

Anomaly Scoring

CRS 3 is designed as an anomaly scoring rule set. This page explains what anomaly scoring is and how to use it.

Overview of Anomaly Scoring

Anomaly scoring, also known as “collaborative detection”, is a scoring mechanism used in CRS. It assigns a numeric score to HTTP transactions (requests and responses), representing how ‘anomalous’ they appear to be. Anomaly scores can then be used to make blocking decisions. The default CRS blocking policy, for example, is to block any transaction that meets or exceeds a defined anomaly score threshold.

How Anomaly Scoring Mode Works

Anomaly scoring mode combines the concepts of collaborative detection and delayed blocking. The key idea to understand is that the inspection/detection rule logic is decoupled from the blocking functionality.

Individual rules designed to detect specific types of attacks and malicious behavior are executed. If a rule matches, no immediate disruptive action is taken (e.g. the transaction is not blocked). Instead, the matched rule contributes to a transactional anomaly score, which acts as a running total. The rules just handle detection, adding to the anomaly score if they match. In addition, an individual matched rule will typically log a record of the match for later reference, including the ID of the matched rule, the data that caused the match, and the URI that was being requested.

Once all of the rules that inspect request data have been executed, blocking evaluation takes place. If the anomaly score is greater than or equal to the inbound anomaly score threshold then the transaction is denied. Transactions that are not denied continue on their journey.

Continuing on, once all of the rules that inspect response data have been executed, a second round of blocking evaluation takes place. If the outbound anomaly score is greater than or equal to the outbound anomaly score threshold, then the response is not returned to the user. (Note that in this case, the request is fully handled by the backend or application; only the response is stopped.)

Info

Having separate inbound and outbound anomaly scores and thresholds allows for request data and response data to be inspected and scored independently.

Summary of Anomaly Scoring Mode

To summarize, anomaly scoring mode in the CRS works like so:

- Execute all request rules

- Make a blocking decision using the inbound anomaly score threshold

- Execute all response rules

- Make a blocking decision using the outbound anomaly score threshold

The Anomaly Scoring Mechanism In Action

As described, individual rules are only responsible for detection and inspection: they do not block or deny transactions. If a rule matches then it increments the anomaly score. This is done using ModSecurity’s setvar action.

Below is an example of a detection rule which matches when a request has a Content-Length header field containing something other than digits. Notice the final line of the rule: it makes use of the setvar action, which will increment the anomaly score if the rule matches:

SecRule REQUEST_HEADERS:Content-Length "!@rx ^\d+$" \
    "id:920160,\
    phase:1,\
    block,\
    t:none,\
    msg:'Content-Length HTTP header is not numeric',\
    logdata:'%{MATCHED_VAR}',\
    tag:'application-multi',\
    tag:'language-multi',\
    tag:'platform-multi',\
    tag:'attack-protocol',\
    tag:'paranoia-level/1',\
    tag:'OWASP_CRS',\
    tag:'capec/1000/210/272',\
    ver:'OWASP_CRS/3.4.0-dev',\
    severity:'CRITICAL',\
    setvar:'tx.anomaly_score_pl1=+%{tx.critical_anomaly_score}'"

Info

Notice that the anomaly score variable name has the suffix pl1. Internally, CRS keeps track of anomaly scores on a per paranoia level basis. The individual paranoia level anomaly scores are added together before each round of blocking evaluation takes place, allowing the total combined inbound or outbound score to be compared to the relevant anomaly score threshold.

Tracking the anomaly score per paranoia level allows for clever scoring mechanisms to be employed, such as the executing paranoia level feature.

The rules files REQUEST-949-BLOCKING-EVALUATION.conf and RESPONSE-959-BLOCKING-EVALUATION.conf are responsible for executing the inbound (request) and outbound (response) rounds of blocking evaluation, respectively. The rules in these files calculate the total inbound or outbound transactional anomaly score and then make a blocking decision, by comparing the result to the defined threshold and taking blocking action if required.
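The following is a simplified sketch of what a round of inbound blocking evaluation might look like, intended only to illustrate the "sum the scores, then compare to the threshold" logic described above. It is not a copy of the rules that CRS ships: the rule IDs 100001 and 100002 and the variable tx.example_total_score are hypothetical names chosen for this illustration, and the sketch only sums the paranoia level 1 score.

```apache
# Illustrative sketch only. CRS already ships its own blocking evaluation rules,
# so this fragment should not be deployed alongside them.

# Step 1: add the PL 1 anomaly score to a running total.
# (tx.example_total_score is a hypothetical variable name.)
SecAction \
    "id:100001,\
    phase:2,\
    pass,\
    t:none,\
    nolog,\
    setvar:'tx.example_total_score=+%{tx.anomaly_score_pl1}'"

# Step 2: deny the request if the total meets or exceeds the inbound threshold.
SecRule TX:EXAMPLE_TOTAL_SCORE "@ge %{tx.inbound_anomaly_score_threshold}" \
    "id:100002,\
    phase:2,\
    deny,\
    status:403,\
    t:none,\
    log,\
    msg:'Inbound anomaly score exceeded (total score: %{tx.example_total_score})'"
```

A fuller version would repeat step 1 for each enabled paranoia level, and an equivalent pair of rules in phase 4 would compare the outbound score against tx.outbound_anomaly_score_threshold.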

Configuring Anomaly Scoring Mode

The following settings can be configured when using anomaly scoring mode:

- Anomaly score thresholds

- Severity levels

- Early blocking

If using a native CRS installation on a web application firewall, these settings are defined in the file crs-setup.conf. If running CRS where it has been integrated into a commercial product or CDN then support varies. Some vendors expose these settings in the GUI while other vendors require custom rules to be written which set the necessary variables. Unfortunately, there are also vendors that don’t allow these settings to be configured at all.

Anomaly Score Thresholds

An anomaly score threshold is the cumulative anomaly score at which an inbound request or an outbound response will be blocked.

Most detected inbound threats carry an anomaly score of 5 (by default), while smaller violations, e.g. protocol and standards violations, carry lower scores. An anomaly score threshold of 7, for example, would require multiple rule matches in order to trigger a block (e.g. one “critical” rule scoring 5 plus a lesser-scoring rule, in order to reach the threshold of 7). An anomaly score threshold of 10 would require at least two “critical” rules to match, or a combination of many lesser-scoring rules. Increasing the anomaly score thresholds makes the CRS less sensitive and hence less likely to block transactions.

Rule coverage should be taken into account when setting anomaly score thresholds. Different CRS rule categories feature different numbers of rules. SQL injection, for example, is covered by more than 50 rules. As a result, a real world SQLi attack can easily gain an anomaly score of 15, 20, or even more. On the other hand, a rare protocol attack might only be covered by a single, specific rule. If such an attack only causes the one specific rule to match then it will only gain an anomaly score of 5. If the inbound anomaly score threshold is set to anything higher than 5 then attacks like the one described will not be stopped. As such, a CRS installation should aim for an inbound anomaly score threshold of 5.

Warning

Increasing the anomaly score thresholds above the defaults (5 for requests, 4 for responses) will allow a substantial number of attacks to bypass CRS and will impede the ability of critical rules to function correctly, such as the LFI, RFI, RCE, and data-exfiltration rules. The anomaly score thresholds should only ever be increased temporarily during false-positive tuning.

Some WAF vendors (such as Cloudflare) set the default anomaly score well above our defaults. This is not a proper implementation of CRS, and will result in bypasses.

Info

An outbound anomaly score threshold of 4 (the default) will block a transaction if any single response rule matches.

Tip

A common practice when working with a new CRS deployment is to start in blocking mode from the very beginning with very high anomaly score thresholds (even as high as 10000). The thresholds can be gradually lowered over time as an iterative process.

This tuning method was developed and advocated by Christian Folini, who documented it in detail, along with examples, in a popular tutorial titled Handling False Positives with OWASP CRS.

CRS uses two anomaly score thresholds, which can be defined using the variables listed below:

| Threshold | Variable |
| --- | --- |
| Inbound anomaly score threshold | tx.inbound_anomaly_score_threshold |
| Outbound anomaly score threshold | tx.outbound_anomaly_score_threshold |

A simple way to set these thresholds is to uncomment and use rule 900110:

SecAction \
    "id:900110,\
    phase:1,\
    nolog,\
    pass,\
    t:none,\
    setvar:tx.inbound_anomaly_score_threshold=5,\
    setvar:tx.outbound_anomaly_score_threshold=4"
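As a hedged illustration of the iterative tuning approach described in the tip above, the same rule could start out with very permissive thresholds that are lowered step by step as false positives are tuned away. The value 10000 comes from the tip; the intermediate steps listed in the comments are only an assumption about how the lowering might be staged.

```apache
# crs-setup.conf: possible starting point for iterative tuning.
# Thresholds this high effectively never block, so this is a temporary
# setting and not a recommendation for permanent use.
SecAction \
    "id:900110,\
    phase:1,\
    nolog,\
    pass,\
    t:none,\
    setvar:tx.inbound_anomaly_score_threshold=10000,\
    setvar:tx.outbound_anomaly_score_threshold=10000"

# Hypothetical later stages, applied as false positives are tuned away:
#   inbound:  10000 -> 100 -> 50 -> 20 -> 10 -> 5
#   outbound: 10000 -> 100 -> 50 -> 20 -> 10 -> 4
```

The end state of this process is the default pair of thresholds (5 and 4), in line with the warning above.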

Severity Levels

Each CRS rule has an associated severity level. Different severity levels have different anomaly scores associated with them. This means that different rules can increment the anomaly score by different amounts if the rules match.

The four severity levels and their default anomaly scores are:

| Severity Level | Default Anomaly Score |
| --- | --- |
| CRITICAL | 5 |
| ERROR | 4 |
| WARNING | 3 |
| NOTICE | 2 |

For example, by default, a single matching CRITICAL rule would increase the anomaly score by 5, while a single matching WARNING rule would increase the anomaly score by 3.

The default anomaly scores are rarely ever changed. It is possible, however, to set custom anomaly scores for severity levels. To do so, uncomment rule 900100 and set the anomaly scores as desired:

SecAction \
    "id:900100,\
    phase:1,\
    nolog,\
    pass,\
    t:none,\
    setvar:tx.critical_anomaly_score=5,\
    setvar:tx.error_anomaly_score=4,\
    setvar:tx.warning_anomaly_score=3,\
    setvar:tx.notice_anomaly_score=2"

Info

The CRS makes use of a ModSecurity feature called macro expansion to propagate the value of the severity level anomaly scores throughout the entire rule set.

Early Blocking

Early blocking is an optional setting which can be enabled to allow blocking decisions to be made earlier than usual.

As summarized previously, anomaly scoring mode works like so:

- Execute all request rules

- Make a blocking decision using the inbound anomaly score threshold

- Execute all response rules

- Make a blocking decision using the outbound anomaly score threshold

The early blocking option takes advantage of the fact that the request and response rules are actually split across different phases. A more detailed overview of anomaly scoring mode looks like so:

- Execute all phase 1 (request header) rules

- Execute all phase 2 (request body) rules

- Make a blocking decision using the inbound anomaly score threshold

- Execute all phase 3 (response header) rules

- Execute all phase 4 (response body) rules

- Make a blocking decision using the outbound anomaly score threshold

More data from a transaction becomes available for inspection in each subsequent processing phase. In phase 1 the request headers are available for inspection. Detection rules that are only concerned with request headers are executed here. In phase 2 the request body also becomes available for inspection. Rules that need to inspect the request body, perhaps in addition to request headers, are executed here.

If a transaction’s anomaly score already meets or exceeds the inbound anomaly score threshold by the end of phase 1 (due to causing phase 1 rules to match) then, in theory, the phase 2 rules don’t need to be executed. This saves the time and resources it would take to process the detection rules in phase 2 and also protects the server from being attacked when handling the body of the request. The majority of CRS rules are executed in phase 2, which is also where the request body inspection rules are located. When dealing with large request bodies, it may be worthwhile to avoid executing the phase 2 rules in this way. The same logic applies to blocking responses that have already met the outbound anomaly score threshold in phase 3, before reaching phase 4. This saves the time and resources required to execute the phase 4 rules, which inspect the response body.

Early blocking makes this possible by inserting two additional rounds of blocking evaluation: one after the phase 1 detection rules have finished executing, and another after the phase 3 detection rules:

- Execute all phase 1 (request header) rules

- Make an early blocking decision using the inbound anomaly score threshold

- Execute all phase 2 (request body) rules

- Make a blocking decision using the inbound anomaly score threshold

- Execute all phase 3 (response header) rules

- Make an early blocking decision using the outbound anomaly score threshold

- Execute all phase 4 (response body) rules

- Make a blocking decision using the outbound anomaly score threshold

Info

More information about processing phases can be found in the processing phases section of the ModSecurity Reference Manual.

Warning

The early blocking option has a major drawback to be aware of: it can cause potential alerts to be hidden.

If a transaction is blocked early then its body is not inspected. For example, if a transaction is blocked early at the end of phase 1 (the request headers phase) then the body of the request is never inspected. If the early blocking option is not enabled, it’s possible that such a transaction would proceed to cause phase 2 rules to match. Early blocking hides these potential alerts. The same applies to responses that trigger an early block: it’s possible that some phase 4 rules would match if early blocking were not enabled.

Using the early blocking option results in having less information to work with, due to fewer rules being executed. This may mean that the full picture is not present in log files when looking back at attacks and malicious traffic. It can also be a problem when dealing with false positives: tuning away a false positive in phase 1 will allow the same request to proceed to the next phase the next time it’s issued (instead of being blocked at the end of phase 1). The problem is that now, with the request making it past phase 1, more, previously “hidden” false positives may appear in phase 2.

Warning

If early blocking is not enabled, there’s a chance that the web server will interfere with the handling of a request between phases 1 and 2. Take the example where the Apache web server issues a redirect to a new location. With a request that violates CRS rules in phase 1, this may mean that the request has a higher anomaly score than the defined threshold but it gets redirected away before blocking evaluation happens.

Enabling the Early Blocking Option

If using a native CRS installation on a web application firewall, the early blocking option can be enabled in the file crs-setup.conf. This is done by uncommenting rule 900120, which sets the variable tx.blocking_early to 1 in order to enable early blocking. CRS otherwise gives this variable a default value of 0, meaning that early blocking is disabled by default.

SecAction \
    "id:900120,\
    phase:1,\
    nolog,\
    pass,\
    t:none,\
    setvar:tx.blocking_early=1"

If running CRS where it has been integrated into a commercial product or CDN then support for the early blocking option varies. Some vendors may allow it to be enabled through the GUI, through a custom rule, or they might not allow it to be enabled at all.

Paranoia Levels

Paranoia levels are an essential concept when working with CRS. This page explains the concept behind paranoia levels and how to work with them on a practical level.

Introduction to Paranoia Levels

The paranoia level (PL) makes it possible to define how aggressive CRS is. Paranoia level 1 (PL 1) provides a set of rules that hardly ever trigger a false alarm (ideally never, but it can happen, depending on the local setup). PL 2 provides additional rules that detect more attacks (these rules operate in addition to the PL 1 rules), but there’s a chance that the additional rules will also trigger new false alarms over perfectly legitimate HTTP requests.

This continues at PL 3, where more rules are added, namely for certain specialized attacks. This leads to even more false alarms. Then at PL 4, the rules are so aggressive that they detect almost every possible attack, yet they also flag a lot of legitimate traffic as malicious.

A higher paranoia level makes it harder for an attacker to go undetected. Yet this comes at the cost of more false positives: more false alarms. That’s the downside to running a rule set that detects almost everything: your business / service / web application is also disrupted.

When false positives occur they need to be tuned away. In ModSecurity parlance: rule exclusions need to be written. A rule exclusion is a rule that disables another rule, either completely or partially (only for certain parameters or for certain URIs). This means the rule set remains intact yet the CRS installation is no longer affected by the false positives.

Note

Depending on the complexity of the service (web application) in question and on the paranoia level, the process of writing rule exclusions can be a substantial amount of work.

This page won’t explore the problem of handling false positives further: for more information on this topic, see the appropriate chapter or refer to the tutorials at netnea.com.

Description of the Four Paranoia Levels

The CRS project views the four paranoia levels as follows:

| Paranoia Level | Description |
| --- | --- |
| 1 | Baseline security with a minimal need to tune away false positives. This is CRS for everybody running an HTTP server on the internet. Please report any false positives encountered with a PL 1 system via GitHub. |
| 2 | Rules that are adequate when real user data is involved. Perhaps an off-the-shelf online shop. Expect to encounter false positives and learn how to tune them away. |
| 3 | Online banking level security with lots of false positives. From a project perspective, false positives are accepted and expected here, so it’s important to learn how to write rule exclusions. |
| 4 | Rules that are so strong (or paranoid) they’re adequate to protect the “crown jewels”. To be used at one’s own risk: be prepared to face a large number of false positives. |

Choosing an Appropriate Paranoia Level

It’s important to think about a service’s security requirements. The requirements for protecting a personal website are very different from those for protecting the admin gateway controlling access to an enterprise’s Active Directory. The paranoia level needs to be chosen accordingly, while also considering the resources (time) required to tune away false positives at higher paranoia levels.

Running at the highest paranoia level, PL 4, may seem appealing from a security standpoint, but it could take many weeks to tune away the false positives encountered. It is crucial to have enough time to fully deal with all false positives.

Warning

Failure to properly tune an installation runs the risk of exposing users to a vast number of false positives. This can lead to a poor user experience, and might ultimately lead to a decision to completely disable CRS. As such, setting a high PL in blocking mode without adequate tuning to deal with false positives is very risky.

If working in an enterprise environment, consider developing an internal policy to map the risk levels and security needs of different assets to the minimum acceptable paranoia level to be used for them, for example:

- Risk Class 0: No personal data involved → PL 1

- Risk Class 1: Personal data involved, e.g. names and addresses → PL 2

- Risk Class 2: Sensitive data involved, e.g. financial/banking data; highest risk class → PL 3

Setting the Paranoia Level

If using a native CRS installation on a web application firewall, the paranoia level is defined by setting the variable tx.paranoia_level in the file crs-setup.conf. This is done in rule 900000, but technically the variable can be set in the Apache or Nginx configuration instead.
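A minimal sketch of rule 900000 is shown below, following the same SecAction pattern as the other crs-setup.conf rules on this page and using the variable name given above. The value 1 is only an example. Newer CRS releases (CRS 4) name this variable tx.blocking_paranoia_level instead, so the crs-setup.conf.example file shipped with the installed version should be treated as the authority on the exact name.

```apache
# crs-setup.conf: set the paranoia level (variable name as used on this page).
# Valid values are 1 to 4; 1 is used here purely as an example.
SecAction \
    "id:900000,\
    phase:1,\
    nolog,\
    pass,\
    t:none,\
    setvar:tx.paranoia_level=1"
```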

If running CRS where it has been integrated into a commercial product or CDN then support varies. Some vendors expose the PL setting in the GUI while other vendors require a custom rule to be written that sets tx.paranoia_level. Unfortunately, there are also vendors that don’t allow the PL to be set at all. (The CRS project considers this to be an incomplete CRS integration, since paranoia levels are a defining feature of CRS.)

How Paranoia Levels Relate to Anomaly Scoring

It’s important to understand that paranoia levels and CRS anomaly scoring (the CRS anomaly threshold/limit) are two entirely different things with no direct connection. The paranoia level controls the number of rules that are enabled while the anomaly threshold defines how many rules can be triggered before a request is blocked.

At the conceptual level, these two ideas could be mixed if the goal was to create a particularly granular security concept. For example, saying “we define the anomaly threshold to be 10, but we compensate for this by running at paranoia level 3, which we acknowledge brings more rule alerts and higher anomaly scores.”

This is technically correct but it overlooks the fact that there are attack categories where CRS scores very low. For example, there is a plan to introduce a new rule to detect POP3 and IMAP injections: this will be a single rule, so, under normal circumstances, an IMAP injection would never score more than 5. Therefore, an installation running at an anomaly threshold of 10 could never block an IMAP injection, even if running at PL 3. In light of this, it’s generally advised to keep things simple and separate: a CRS installation should aim for an anomaly threshold of 5 and a paranoia level as deemed appropriate.

Moving to a Higher Paranoia Level

Introducing the Executing Paranoia Level

Consider an example of a successful CRS installation: it operates at paranoia level 1, a handful of rule exclusions are in place to deal with false positives, and the inbound anomaly score threshold is set to 5, which blocks would-be attackers immediately. Things are running smoothly at paranoia level 1, but imagine that there’s now a requirement to increase the level of security by raising the paranoia level to 2. Moving to PL 2 will almost certainly cause new false positives: given the strict anomaly score threshold of 5, these will likely cause legitimate users to be blocked.

There’s a simple, but risky, way to raise the paranoia level of a working and tuned CRS installation: raise the anomaly score threshold for a period of time, in order to account for the additional false positives that are anticipated. Raising the anomaly score threshold will allow through attacks that would have been blocked previously. The idea of decreasing security in order to improve it is counter-intuitive, as well as being bad practice.

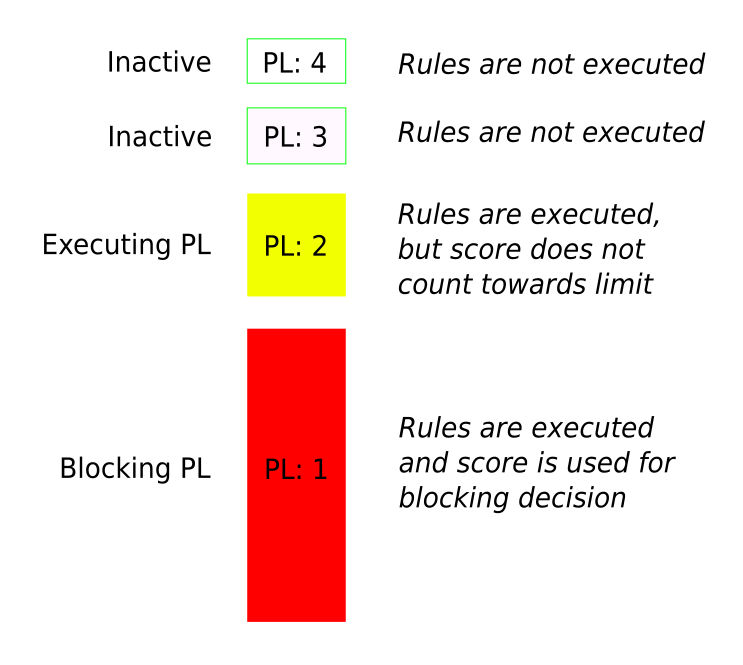

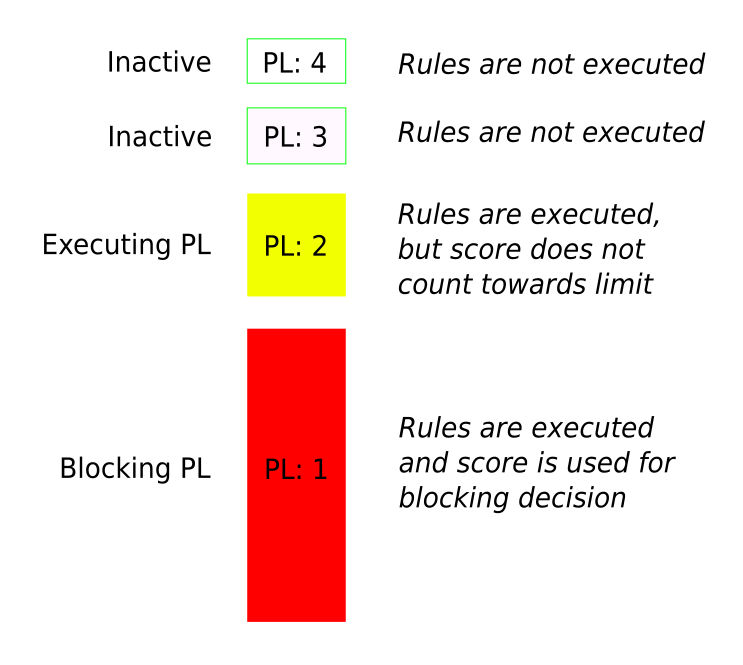

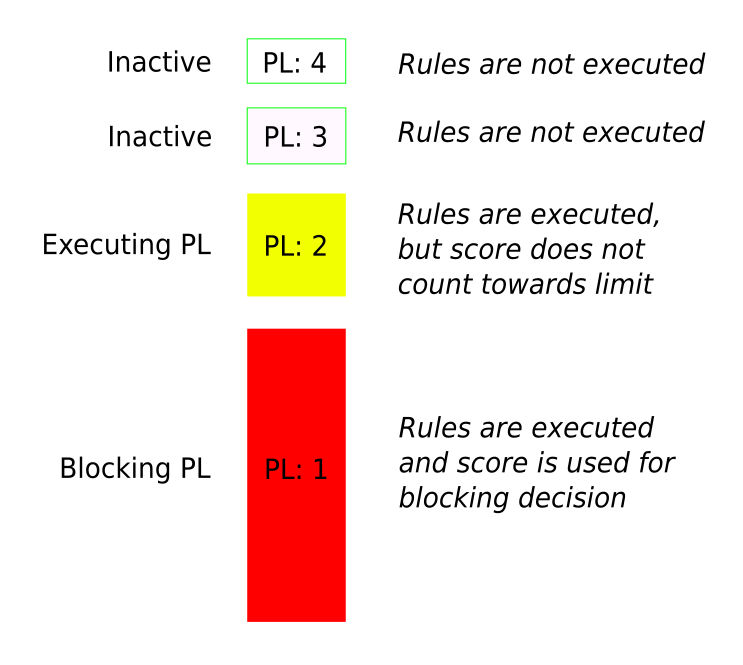

There is a better solution. First, think of the paranoia level as being the “blocking paranoia level”. The rules enabled in the blocking paranoia level count towards the anomaly score threshold, which is used to determine whether or not to block a given request. Now introduce an additional paranoia level: the “executing paranoia level”. By default, the executing paranoia level is automatically set to be equal to the blocking paranoia level. If, however, the executing paranoia level is set to be higher than the blocking paranoia level then the additional rules from the higher paranoia level are executed but will never count towards the anomaly score threshold used to make the blocking decision.

Example: Blocking paranoia level of 1 and executing paranoia level of 2

The executing paranoia level allows rules from a higher paranoia level to be run, and potentially to trigger false positives, without increasing the probability of blocking legitimate users. Any new false positives can then be tuned away using rule exclusions. Once ready and with all the new rule exclusions in place, the blocking paranoia level can then be raised to match the executing paranoia level. This approach is a flexible and secure way to raise the paranoia level on a working production system without the risk of new false positives blocking users in error.
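The example of a blocking paranoia level of 1 with an executing paranoia level of 2 could be configured roughly as follows. This is a sketch under the assumption of a CRS 3.x style crs-setup.conf, where rule 900001 sets tx.executing_paranoia_level; CRS 4 renames the two variables to tx.blocking_paranoia_level and tx.detection_paranoia_level, so the names should be checked against the installed version.

```apache
# Blocking paranoia level: only PL 1 rules count towards the blocking decision.
SecAction \
    "id:900000,\
    phase:1,\
    nolog,\
    pass,\
    t:none,\
    setvar:tx.paranoia_level=1"

# Executing paranoia level: PL 2 rules also run and log their matches,
# but their scores do not count towards the blocking decision.
SecAction \
    "id:900001,\
    phase:1,\
    nolog,\
    pass,\
    t:none,\
    setvar:tx.executing_paranoia_level=2"
```

Once the PL 2 false positives seen in the logs have been tuned away, the value in rule 900000 can be raised to 2 so that both levels match again.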

Moving to a Lower Paranoia Level

It is always possible to lower the paranoia level in order to experience fewer false positives, or none at all. Because of the way the rule set is constructed, lowering the paranoia level always means fewer or no false positives, while raising the paranoia level is very likely to introduce more false positives.

Further Reading

For a slightly longer explanation of paranoia levels, please refer to our blog post on the subject. The blog post also discusses the pros and cons of dynamically setting the paranoia level on a per-request basis, firstly by geolocation (i.e. a lower PL for domestic traffic and a higher PL for non-domestic traffic) and secondly based on previous behavior (i.e. a user is dealt with at PL 1, but if they ever trigger a rule then they’re handled at PL 2 for all future requests).

False Positives and Tuning

When a genuine transaction causes a rule from CRS to match in error it is described as a false positive. False positives need to be tuned away by writing rule exclusions, as this page explains.

What are False Positives?

CRS provides generic attack detection capabilities. A fresh CRS deployment has no awareness of the web services that may be running behind it, or the quirks of how those services work. It is possible that genuine transactions may cause some CRS rules to match in error, if the transactions happen to match one of the generic attack behaviors or patterns that are being detected. Such a match is referred to as a false positive, or false alarm.

False positives are particularly likely to happen when operating at higher paranoia levels. While paranoia level 1 is designed to cause few, ideally zero, false positives, higher paranoia levels are increasingly likely to cause false positives. Each successive paranoia level introduces additional rules, with higher paranoia levels adding more aggressive rules. As such, the higher the paranoia level is the more likely it is that false positives will occur. That is the cost of the higher security provided by higher paranoia levels: the additional time it takes to tune away the increasing number of false positives.

Example False Positive

Imagine deploying the CRS in front of a WordPress instance. The WordPress engine features the ability to add HTML to blog posts (as well as JavaScript, if you’re an administrator). Internally, WordPress has rules controlling which HTML tags are allowed to be used. This list of allowed tags has been studied heavily by the security community and it’s considered to be a secure mechanism.

Consider the CRS inspecting a request with a URL like the following:

www.example.com/?wp_post=<h1>Welcome+To+My+Blog</h1>

At paranoia level 2, the wp_post query string parameter would trigger a match against an XSS attack rule due to the presence of HTML tags. CRS is unaware that the problem is properly mitigated on the server side and, as a result, the request causes a false positive and may be blocked. The false positive may generate an error log line like the following:

[Wed Jan 01 00:00:00.123456 2022] [:error] [pid 2357:tid 140543564093184] [client 10.0.0.1:0] [client 10.0.0.1] ModSecurity: Warning. Pattern match "<(?:a|abbr|acronym|address|applet|area|audioscope|b|base|basefront|bdo|bgsound|big|blackface|blink|blockquote|body|bq|br|button|caption|center|cite|code|col|colgroup|comment|dd|del|dfn|dir|div|dl|dt|em|embed|fieldset|fn|font|form|frame|frameset|h1|head ..." at ARGS:wp_post. [file "/etc/crs/rules/REQUEST-941-APPLICATION-ATTACK-XSS.conf"] [line "783"] [id "941320"] [msg "Possible XSS Attack Detected - HTML Tag Handler"] [data "Matched Data: <h1> found within ARGS:wp_post: <h1>welcome to my blog</h1>"] [severity "CRITICAL"] [ver "OWASP_CRS/3.3.2"] [tag "application-multi"] [tag "language-multi"] [tag "platform-multi"] [tag "attack-xss"] [tag "OWASP_CRS"] [tag "capec/1000/152/242/63"] [tag "PCI/6.5.1"] [tag "paranoia-level/2"] [hostname "www.example.com"] [uri "/"] [unique_id "Yad-7q03dV56xYsnGhYJlQAAAAA"]

This example log entry provides lots of information about the rule match. Some of the key pieces of information are:

- The message from ModSecurity, which explains what happened and where:

  ModSecurity: Warning. Pattern match "<(?:a|abbr|acronym ..." at ARGS:wp_post.

- The rule ID of the matched rule:

  [id "941320"]

- The additional matching data from the rule, which explains precisely what caused the rule match:

  [data "Matched Data: <h1> found within ARGS:wp_post: <h1>welcome to my blog</h1>"]

Tip

CRS ships with a prebuilt rule exclusion package for WordPress, as well as other popular web applications, to help prevent false positives. See the section on rule exclusion packages for details.

Why are False Positives a Problem?

Alert Fatigue

If a system is prone to reporting false positives then the alerts it raises may be ignored. This may lead to real attacks being overlooked. For this reason, leaving false positives mixed in with real attacks is dangerous: the false positives should be resolved.

A false positive alert may contain sensitive information, for example usernames, passwords, and payment card data. Imagine a situation where a web application user has set their password to ‘/bin/bash’: without proper tuning, this input would cause a false positive every time the user logged in, writing the user’s password to the error log file in plaintext as part of the alert.

It’s also important to consider issues surrounding regulatory compliance. Data protection and privacy laws, like GDPR and CCPA, place strict duties and limitations on what information can be gathered and how that information is processed and stored. The unnecessary logging data generated by false positives can cause problems in this regard.

Poor User Experience

When working in strict blocking mode, false positives can cause legitimate user transactions to be blocked, leading to poor user experience. This can create pressure to disable the CRS or even to remove the WAF solution entirely, which is an unnecessary sacrifice of security for usability. The correct solution to this problem is to tune away the false positives so that they don’t reoccur in the future.

Tuning Away False Positives

Directly Modifying CRS Rules

Warning

Making direct modifications to CRS rule files is a bad idea and is strongly discouraged.

It may seem logical to prevent false positives by modifying the offending CRS rules. If a detection pattern in a CRS rule is causing matches with genuine transactions then the pattern could be modified. This is a bad idea.

Directly modifying CRS rules essentially creates a fork of the rule set. Any modifications made would be undone by a rule set update, meaning that any changes would need to be continually reapplied by hand. This is a tedious, time consuming, and error-prone solution.

There are alternative ways to deal with false positives, as described below. These methods sometimes require slightly more effort and knowledge but they do not cause problems when performing rule set updates.

Rule Exclusions

Overview

The ModSecurity WAF engine has flexible ways to tune away false positives. It provides several rule exclusion (RE) mechanisms which allow rules to be modified without directly changing the rules themselves. This makes it possible to work with third-party rule sets, like CRS, by adapting rules as needed while leaving the rule set files intact and unmodified. This allows for easy rule set updates.

Two fundamentally different types of rule exclusions are supported:

- Configure-time rule exclusions: Rule exclusions that are applied once, at configure-time (e.g. when (re)starting or reloading ModSecurity, or the server process that holds it). For example: “remove rule X at startup and never execute it.”

This type of rule exclusion takes the form of a ModSecurity directive, e.g. SecRuleRemoveById.

- Runtime rule exclusions: Rule exclusions that are applied at runtime on a per-transaction basis (e.g. exclusions that can be conditionally applied to some transactions but not others). For example: “if a transaction is a POST request to the location ‘login.php’, remove rule X.”

This type of rule exclusion takes the form of a SecRule.

Info

Runtime rule exclusions, while granular and flexible, have a computational overhead, albeit a small one. A runtime rule exclusion is an extra SecRule which must be evaluated for every transaction.

In addition to the two types of exclusions, rules can be excluded in two different ways:

- Exclude the entire rule/tag: An entire rule, or entire category of rules (by specifying a tag), is removed and will not be executed by the rule engine.

- Exclude a specific variable from the rule/tag: A specific variable will be excluded from a specific rule, or excluded from a category of rules (by specifying a tag).

These two methods can also operate on multiple individual rules, or even entire rule categories (identified either by tag or by using a range of rule IDs).

The combinations of rule exclusion types and methods allow for writing rule exclusions of varying granularity. Very coarse rule exclusions can be written, for example “remove all SQL injection rules” using SecRuleRemoveByTag. Extremely granular rule exclusions can also be written, for example “for transactions to the location ‘web_app_2/function.php’, exclude the query string parameter ‘user_id’ from rule 920280” using a SecRule and the action ctl:ruleRemoveTargetById.
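As a rough illustration of the two extremes described above, the following sketch shows a coarse configure-time exclusion next to a granular runtime exclusion. The rule ID 1010 is an arbitrary placeholder chosen for this example, the leading slash in the location is assumed, and ARGS_GET is assumed to be the right collection for a query string parameter. The two exclusions are shown together only for comparison: in a real configuration they belong in different places relative to the CRS include, as covered under the placement of rule exclusions later in this section.

```apache
# Coarse, configure-time: remove every rule tagged 'attack-sqli'
SecRuleRemoveByTag attack-sqli

# Granular, runtime: for one location only, exclude the query string
# parameter 'user_id' from rule 920280 (id:1010 is a placeholder)
SecRule REQUEST_URI "@beginsWith /web_app_2/function.php" \
    "id:1010,\
    phase:1,\
    pass,\
    t:none,\
    nolog,\
    ctl:ruleRemoveTargetById=920280;ARGS_GET:user_id"
```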

The different rule exclusion types and methods are summarized in the table below, which presents the main ModSecurity directives and actions that can be used for each type and method of rule exclusion:

| | Exclude entire rule/tag | Exclude specific variable from rule/tag |
| --- | --- | --- |
| Configure-time | SecRuleRemoveById* SecRuleRemoveByTag | SecRuleUpdateTargetById SecRuleUpdateTargetByTag |
| Runtime | ctl:ruleRemoveById** ctl:ruleRemoveByTag | ctl:ruleRemoveTargetById ctl:ruleRemoveTargetByTag |

*Can also exclude ranges of rules or multiple space separated rules.

**Can also exclude ranges of rules (not currently supported in ModSecurity v3).

Tip

This table is available as a well-presented, downloadable Rule Exclusion Cheatsheet from Christian Folini.

Note

When using SecRuleUpdateTargetById and ctl:ruleRemoveTargetById with chained rules, target exclusions are only applied to the first rule in the chain. It is not possible to exclude targets from the other rules in the chain. Depending on how the rule is written, it may be necessary to remove the entire rule using SecRuleRemoveById or ctl:ruleRemoveById. This is a current limitation of the SecLang configuration language.

Note

There’s also a third group of rule exclusion directives and actions, the use of which is discouraged. As well as excluding rules “ById” and “ByTag”, it’s also possible to exclude “ByMsg” (SecRuleRemoveByMsg, SecRuleUpdateTargetByMsg, ctl:ruleRemoveByMsg, and ctl:ruleRemoveTargetByMsg). This excludes rules based on the message they write to the error log. These messages can be dynamic and may contain special characters. As such, trying to exclude rules by message is difficult and error-prone.

Tip

When creating a runtime rule exclusion, we recommend specifying the t:none transformation to ensure you have full control over the behavior of the rule. See our docs on rule creation for an overview of how a runtime rule works: https://coreruleset.org/docs/3-about-rules/creating/

CRS rules typically feature multiple tags, grouping them into different categories. For example, a rule might be tagged by attack type (‘attack-rce’, ‘attack-xss’, etc.), by language (‘language-java’, ‘language-php’, etc.), and by platform (‘platform-apache’, ‘platform-unix’, etc.).

Tags can be used to remove or modify entire categories of rules all at once, but some tags are more useful than others in this regard. Tags for specific attack types, languages, and platforms may be useful for writing rule exclusions. For example, if lots of the SQL injection rules are causing false positives but SQL isn’t in use anywhere in the back end web application then it may be worthwhile to remove all CRS rules tagged with ‘attack-sqli’ (SecRuleRemoveByTag attack-sqli).

Some rule tags are not useful for rule exclusion purposes. For example, there are generic tags like ‘language-multi’ and ‘platform-multi’: these cover hundreds of rules across the entire CRS and don’t represent a rule property that is meaningful enough to be useful in rule exclusions. There are also tags that categorize rules based on well-known security standards, like CAPEC and PCI DSS (e.g. ‘capec/1000/153/267’, ‘PCI/6.5.4’). These tags may be useful for informational and reporting purposes but are not useful in the context of writing rule exclusions.

Excluding rules using tags may be more useful than excluding using rule ranges in situations where a category of rules is spread across multiple files. For example, the ‘language-php’ rules are spread across several different rule files (both inbound and outbound rule files).
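For instance, the PHP rules mentioned above could be removed with a single configure-time directive instead of several rule ranges. This is only a sketch, and it assumes that the application stack behind the WAF makes no use of PHP at all:

```apache
# Remove every CRS rule tagged 'language-php', regardless of which
# rule file (inbound or outbound) the rule lives in
SecRuleRemoveByTag language-php
```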

Rule Ranges

As well as rules being tagged using different categories, CRS rules are organized into files by general category. In addition, CRS rule IDs follow a consistent numbering convention. This makes it easy to remove unwanted types of rules by removing ranges of rule IDs. For example, the file REQUEST-913-SCANNER-DETECTION.conf contains rules related to detecting well known scanners and crawlers, which all have rule IDs in the range 913000-913999. All of the rules in this file can be easily removed using a configure-time rule exclusion, like so:

SecRuleRemoveById "913000-913999"

Excluding rules using rule ranges may be more useful than excluding using tags in situations where tags are less relevant or where tags vary across the rules in question. For example, a rule range may be the most appropriate solution if the goal is to remove all rules contained in a single file, regardless of how the rules are tagged.
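The footnote to the table above notes that SecRuleRemoveById also accepts multiple space separated rule IDs, and these can be combined with ranges in a single directive. A minimal sketch, in which the two individual rule IDs are picked purely for illustration:

```apache
# Remove two individual rules plus the whole scanner detection range.
# The single IDs are illustrative: substitute the rules that are
# actually causing false positives.
SecRuleRemoveById 920230 933151 "913000-913999"
```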

Support for Regular Expressions

Most of the configure-time rule exclusion directives feature some level of support for using regular expressions. This makes it possible, for example, to exclude a dynamically named variable from a rule. The directives with support for regular expressions are:

- SecRuleRemoveByTag

A regular expression is used for the tag match. For example, SecRuleRemoveByTag "injection" would match both “attack-injection-generic” and “attack-injection-php”.

- SecRuleRemoveByMsg

A regular expression is used for the message match. For example, SecRuleRemoveByMsg "File Access" would match both “OS File Access Attempt” and “Restricted File Access Attempt”.

- SecRuleUpdateTargetById, SecRuleUpdateTargetByTag, SecRuleUpdateTargetByMsg

A regular expression can optionally be used in the target specification by enclosing the regular expression in forward slashes. This is useful for dealing with dynamically named variables, like so:

SecRuleUpdateTargetById 942440 "!REQUEST_COOKIES:/^uid_.*/"

This example would exclude request cookies named “uid_0123456”, “uid_6543210”, etc. from rule 942440.

Note

The ‘ctl’ action for writing runtime rule exclusions does not support any use of regular expressions. This is a known limitation of the ModSecurity rule engine.

Placement of Rule Exclusions

It is crucial to put rule exclusions in the correct place, otherwise they may not work.

- Configure-time rule exclusions: These must be placed after the CRS has been included in a configuration. For example:

# Include CRS

Include crs/rules/*.conf

# Configure-time rule exclusions

...

Configure-time rule exclusions remove rules. A rule must already be defined before it can be removed (something cannot be removed if it doesn’t yet exist). As such, this type of rule exclusion must appear after the CRS and all its rules have been included.

- Runtime rule exclusions: These must be placed before the CRS has been included in a configuration. For example:

# Runtime rule exclusions

...

# Include CRS

Include crs/rules/*.conf

Runtime rule exclusions modify rules in some way. If a rule is to be modified then this should occur before the rule is executed (modifying a rule after it has been executed has no effect). As such, this type of rule exclusion must appear before the CRS and all its rules have been included.
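Putting the two placement rules together, a combined configuration might look something like the following sketch. The include path is the one used in the snippets above, and the two exclusions are borrowed from the examples later in this section purely to show the ordering. The rule ID 1000 is a placeholder.

```apache
# Runtime rule exclusions: defined BEFORE the CRS rules so that they
# are evaluated before the rules they modify
SecRule REQUEST_URI "@beginsWith /webapp/function.php" \
    "id:1000,\
    phase:1,\
    pass,\
    t:none,\
    nolog,\
    ctl:ruleRemoveById=920230"

# Include CRS
Include crs/rules/*.conf

# Configure-time rule exclusions: placed AFTER the CRS rules, because
# a rule must already be defined before it can be removed
SecRuleRemoveById 933151
```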

Tip

CRS ships with the files REQUEST-900-EXCLUSION-RULES-BEFORE-CRS.conf.example and RESPONSE-999-EXCLUSION-RULES-AFTER-CRS.conf.example. After dropping the “.example” suffix, these files can be used to house “BEFORE-CRS” (i.e. runtime) and “AFTER-CRS” (i.e. configure-time) rule exclusions in their correct places relative to the CRS rules. These files also contain example rule exclusions to copy and learn from.

Example 1 (SecRuleRemoveById)

(Configure-time RE. Exclude entire rule.)

Scenario: Rule 933151, “PHP Injection Attack: Medium-Risk PHP Function Name Found”, is causing false positives. The web application behind the WAF makes no use of PHP. As such, it is deemed safe to tune away this false positive by completely removing rule 933151.

Rule Exclusion:

# CRS Rule Exclusion: 933151 - PHP Injection Attack: Medium-Risk PHP Function Name Found

SecRuleRemoveById 933151

Example 2 (SecRuleRemoveByTag)

(Configure-time RE. Exclude entire tag.)

Scenario: Several different parts of a web application are causing false positives with various SQL injection rules. None of the web services behind the WAF make use of SQL, so it is deemed safe to tune away these false positives by removing all the SQLi detection rules.

Rule Exclusion:

# CRS Rule Exclusion: Remove all SQLi detection rules

SecRuleRemoveByTag attack-sqli

Example 3 (SecRuleUpdateTargetById)

(Configure-time RE. Exclude specific variable from rule.)

Scenario: The content of a POST body parameter named ‘wp_post’ is causing false positives with rule 941320, “Possible XSS Attack Detected - HTML Tag Handler”. Removing this rule entirely is deemed to be unacceptable: the rule is not causing any other issues, and the protection it provides should be retained for everything apart from ‘wp_post’. It is decided to tune away this false positive by excluding ‘wp_post’ from rule 941320.

Rule Exclusion:

# CRS Rule Exclusion: 941320 - Possible XSS Attack Detected - HTML Tag Handler

SecRuleUpdateTargetById 941320 "!ARGS:wp_post"

Example 4 (SecRuleUpdateTargetByTag)

(Configure-time RE. Exclude specific variable from tag.)

Scenario: The values of request cookies with random names of the form ‘uid_<STRING>’ are causing false positives with various SQL injection rules. It is decided that it is not a risk to allow SQL-like content in these cookie values. However, it is deemed unacceptable to disable the SQLi detection rules for anything apart from the request cookies in question. It is decided to tune away these false positives by excluding only the problematic request cookies from the SQLi detection rules. A regular expression is to be used to handle the random string portion of the cookie names.

Rule Exclusion:

# CRS Rule Exclusion: Exclude the request cookies 'uid_<STRING>' from the SQLi detection rules

SecRuleUpdateTargetByTag attack-sqli "!REQUEST_COOKIES:/^uid_.*/"

Example 5 (ctl:ruleRemoveById)

(Runtime RE. Exclude entire rule.)

Scenario: Rule 920230, “Multiple URL Encoding Detected”, is causing false positives at the specific location ‘/webapp/function.php’. This is being caused by a known quirk in how the web application has been written, and it cannot be fixed in the application. It is deemed safe to tune away this false positive by removing rule 920230 for that specific location only.

Rule Exclusion:

# CRS Rule Exclusion: 920230 - Multiple URL Encoding Detected

SecRule REQUEST_URI "@beginsWith /webapp/function.php" \
    "id:1000,\
    phase:1,\
    pass,\
    t:none,\
    nolog,\
    ctl:ruleRemoveById=920230"

Example 6 (ctl:ruleRemoveByTag)

(Runtime RE. Exclude entire tag.)